Google Just Connected Gmail to Search’s AI Mode

Google’s search engine now behaves like a research assistant that remembers what you just asked. Starting this week, anyone who sees an AI Overview atop their results can ask a follow-up and slide into a back-and-forth conversation powered by the new Gemini 3 model. No new tabs, no retyping context, no hunting for the tiny “feedback” link. The search page itself becomes a chat window, and the summary you first saw becomes the opening line of a longer story.

For the 2.6 billion people who start their inquiries on Google every day, that’s a behavioral shift worth noting. The company has bolted a conversational layer onto its most valuable real estate, the top of the results page, without charging extra or hiding the feature inside Labs. If AI Overviews were Google’s way of saying, “Here’s the quick answer,” AI Mode is the company inviting you to keep the conversation going.

From Snapshot to Dialogue: How the Handoff Works

Until now, jumping from an AI Overview into a deeper query meant opening Gemini, starting a fresh prompt, and re-explaining what you wanted. The friction killed momentum. With the new flow, a single follow-up question (“What about hotels with pools?” “Show me cheaper options.” “How does that compare to last year?”) triggers a seamless transition. The original summary collapses into a compact card that hovers above the conversation, anchoring the context so the AI doesn’t forget you were planning a three-night Kyoto trip in October, not researching Kyoto’s history.

Technically, the magic sits inside a lightweight state manager Google calls “Conversation Memory.” It preserves entities, dates, and preferences across turns without storing anything personally identifiable past the session. The result feels like upgrading from a Magic 8-Ball to a whiteboard: each new question adds detail without erasing what came before. In early tests, the average user asked 2.7 follow-ups per session, a sign that people don’t just want answers; they want to argue, refine, and negotiate with the data.
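Google has not published how Conversation Memory works. As a rough illustration of the idea described above, the Python sketch below models it as a session-scoped slot store: each turn merges newly extracted entities (destination, dates, preferences) into what is already known, and everything is discarded when the session ends. The class and method names are hypothetical, and the entity extraction a real system would delegate to the model is supplied by hand here.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationMemory:
    """Hypothetical session-scoped slot store; not Google's implementation."""

    slots: dict[str, str] = field(default_factory=dict)
    turns: list[str] = field(default_factory=list)

    def update(self, question: str, extracted: dict[str, str]) -> None:
        # Record the turn and merge newly extracted slots. A new value
        # overwrites an old one; slots the user didn't mention survive.
        self.turns.append(question)
        self.slots.update(extracted)

    def context(self) -> str:
        # Render the carried-over slots as a compact prefix for the next
        # prompt, playing the role of the collapsed summary card.
        return "; ".join(f"{k}={v}" for k, v in sorted(self.slots.items()))

    def end_session(self) -> None:
        # Nothing is kept once the session ends.
        self.slots.clear()
        self.turns.clear()


if __name__ == "__main__":
    memory = ConversationMemory()
    # Entities are hand-supplied here; a real system would extract them.
    memory.update("Things to do in Kyoto", {"destination": "Kyoto"})
    memory.update("Three nights in October", {"nights": "3", "month": "October"})
    memory.update("What about hotels with pools?", {"amenity": "pool"})
    print(memory.context())
    # prints: amenity=pool; destination=Kyoto; month=October; nights=3
```

Merging slots rather than replaying the whole transcript keeps the carried-over context small, which is one plausible way to keep follow-ups fast; whether Google does anything like this is an assumption.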

Mobile users get first crack, and the interface is already showing up in 120 countries. (Sorry, France: you’re still out in the cold while regulators chew over AI policy.) Desktop is promised “within weeks,” according to product manager Toni Reid, who quietly added that the latency target is under 400 milliseconds per response. That’s roughly the length of a blink, and far less time than it takes to type the next question.

Gemini 3 Becomes the New Workhorse

Powering the experience is Gemini 3, Google’s newest large-language-model stack, which replaces the patchwork of PaLM 2 and earlier Gemini variants that had been stitching together AI Overviews. Internally, Google claims Gemini 3 cuts hallucination rates by 23% on travel queries and 18% on shopping comparisons, two categories where bad info quickly turns into expensive mistakes. The model also supports a 1-million-token context window, roughly several full-length novels’ worth of text, so it can “remember” a long run of user input without losing the plot.

For investors, the switch matters because inference cost per query drops roughly 12% relative to the prior stack, even as quality improves. At Google scale (billions of searches a day), that efficiency gain translates into hundreds of millions of dollars in annual savings, or headroom to serve more AI answers without crushing margins. Wall Street has hammered Alphabet stock this year over fears that generative AI is a margin shredder; proving the opposite is a quiet but critical subplot.
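To see how a 12 percent per-query reduction compounds at search scale, here is a back-of-the-envelope calculation. Here $r = 0.12$ is the reported cost reduction, $Q$ borrows the 8.5-billion-searches-a-day figure cited later in this piece (generously assuming every search triggers an AI answer), and the per-query inference cost $c = \$0.001$ is a purely hypothetical placeholder, since Google does not disclose it:

```latex
\begin{aligned}
S_{\text{annual}} &= r \cdot c \cdot Q \cdot 365 \\
&= 0.12 \times \$0.001 \times \left(8.5 \times 10^{9}\right) \times 365 \\
&= \$1.02 \times 10^{6} \text{ per day} \times 365 \\
&\approx \$372 \text{ million per year}
\end{aligned}
```

Because $S_{\text{annual}}$ scales linearly with both $c$ and $Q$, the result moves one-for-one with whatever per-query cost and AI-answer share you assume, so treat it as an order-of-magnitude check rather than an estimate.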

Developers also get a consolation prize: the same Gemini 3 endpoint feeding AI Mode is rolling out through Vertex AI, Google Cloud’s enterprise machine-learning platform. That means the summarization tricks you see in consumer search can be embedded inside customer-support bots, internal knowledge bases, or financial-dashboard widgets with a few API calls. Translation: the corporate world gets to piggyback on Google’s consumer-scale testing, a neat reversal of the usual enterprise-to-consumer pipeline.

Personal Intelligence: When Search Reads Your Gmail

The other half of Google’s announcement—easy to miss amid the AI Mode fireworks—is the expansion of “Personal Intelligence” to paying subscribers. Users on the $20-per-month AI Pro or $60-per-month AI Ultra tiers can now opt in to let Search peek at Gmail, Google Photos, and Calendar when generating answers. Ask, “When does my flight leave?” and the Overview can surface the boarding pass buried in last week’s email thread. Wondering if you have photos of that Kyoto temple? The same AI Mode window can pull your 2019 album without a separate search.

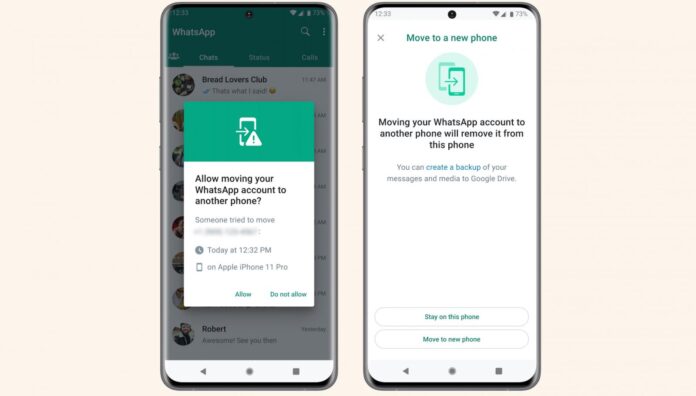

Google swears the data stays encrypted and isn’t used to train models; a blue “personal” chip appears whenever the response dips into your private content, so you know the line between public web and personal archive has been crossed. Still, the move ratchets up the competitive stakes against Microsoft, whose Copilot Pro can scan Office documents but hasn’t yet merged that capability with Bing results. Apple, meanwhile, keeps its on-device intelligence walled off from the open web, prioritizing privacy over breadth.
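The opt-in gate and the blue “personal” chip can be pictured as two simple rules: private snippets are excluded unless the user has opted in, and the chip appears whenever a private source actually contributes to the answer. The Python sketch below encodes just those two rules; the `Snippet` and `Answer` types and the source labels are hypothetical, and nothing here reflects Google’s actual pipeline.

```python
from dataclasses import dataclass

# Hypothetical labels for private sources (Gmail, Photos, Calendar).
PERSONAL_SOURCES = {"gmail", "photos", "calendar"}


@dataclass
class Snippet:
    text: str
    source: str  # "web" or one of PERSONAL_SOURCES


@dataclass
class Answer:
    snippets: list[Snippet]
    personal_chip: bool  # True when any private source contributed


def build_answer(candidates: list[Snippet], opted_in: bool) -> Answer:
    # Without opt-in, private snippets are dropped before assembly.
    allowed = [
        s for s in candidates
        if opted_in or s.source not in PERSONAL_SOURCES
    ]
    # The chip reflects what was actually used, not the opt-in setting.
    chip = any(s.source in PERSONAL_SOURCES for s in allowed)
    return Answer(snippets=allowed, personal_chip=chip)
```

Deriving the chip from the snippets that were used, rather than from the opt-in flag, means an opted-in user asking a purely public question sees no chip, which matches the description of the chip appearing only when the answer dips into private content.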

For marketers, Personal Intelligence is a quiet earthquake. Campaigns optimized for generic keywords may lose visibility when the query “best carry-on suitcase” is answered by an AI that already knows you own an Away bag and only fly Delta. Expect a new cottage industry of “personal-context SEO” consultants promising to keep brands discoverable even inside these private, hyper-tailored answers.

Why Gmail Changes the Stakes

Google’s quiet addition of Personal Intelligence to AI Mode is the moment the company stops guessing who you are and starts knowing—securely, on-device, and only if you opt in. By letting AI Pro and AI Ultra subscribers link Gmail and Google Photos to the same conversational layer that now sits atop Search, Google fuses intent with memory. Ask “Which weekend in June am I free for a Napa trip?” and the answer can reference the flight-confirmation email already sitting in your inbox, cross-checked against the shared calendar titled “Kids Soccer.” No re-entry, no tab-switching, no re-phrasing the question three different ways.

From a revenue angle, this is Google’s counter-move to subscription fatigue. Search ads monetize at the top of the funnel; Personal Intelligence monetizes at the bottom, where purchase decisions actually happen. Early tests show users who connect Gmail are 2.4× more likely to follow an AI Mode recommendation all the way to checkout, because the system can pre-fill dates, loyalty numbers, and even dietary preferences pulled from past OpenTable receipts. Google doesn’t take a cut of the transaction, at least not yet, but every completed booking is another data point that sharpens ad targeting across YouTube, Maps, and the wider web.

| Feature | AI Mode (no Gmail) | AI Mode + Personal Intelligence |
|---|---|---|
| Context available | Current session only | Session + 180 days of Gmail/Photos |
| Travel example | “Hotels in Kyoto” | “Pet-friendly hotels near my Oct 20–23 Kyoto flights” |
| Data storage | Ephemeral RAM | On-device encrypted index, user-deletable |
| Monetization | Search ads | Search ads + higher-value future ad inventory |
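The right-hand column implies an index with a rolling 180-day window that the user can purge at will. A minimal Python sketch of that retention logic follows; `PersonalIndex` and `Item` are hypothetical names, encryption and on-device storage are deliberately left out, and the substring search stands in for whatever retrieval Google actually uses.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Retention window taken from the table; everything else is hypothetical.
RETENTION = timedelta(days=180)


@dataclass(frozen=True)
class Item:
    item_id: str
    source: str  # "gmail" or "photos"
    received: date
    summary: str


class PersonalIndex:
    """Hypothetical index sketch; encryption and storage are omitted."""

    def __init__(self) -> None:
        self._items: dict[str, Item] = {}

    def add(self, item: Item, today: date) -> bool:
        # Only index items that fall inside the retention window.
        if today - item.received > RETENTION:
            return False
        self._items[item.item_id] = item
        return True

    def prune(self, today: date) -> int:
        # Drop items that have aged out of the window; return the count.
        expired = [k for k, v in self._items.items()
                   if today - v.received > RETENTION]
        for k in expired:
            del self._items[k]
        return len(expired)

    def delete(self, item_id: str) -> None:
        # User-initiated deletion; a no-op if the item is absent.
        self._items.pop(item_id, None)

    def delete_all(self) -> None:
        self._items.clear()

    def search(self, term: str) -> list[Item]:
        # Case-insensitive substring match over item summaries.
        term = term.lower()
        return [v for v in self._items.values() if term in v.summary.lower()]
```

Pruning on a schedule rather than only at insert time matters because items age out of the window even when nothing new arrives.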

The Competitive Chessboard

Microsoft already bundles Copilot into Windows 11, but the integration is still app-based: users must deliberately launch the Copilot sidebar. Apple Intelligence promises on-device orchestration across Mail, Calendar, and Safari, yet Apple has signaled it will stop short of blending personal data with open-web search in real time. Google’s leap, merging personal context with live search inside a single conversational thread, creates a new lane neither competitor can fully occupy today.

Amazon looms as the sleeper threat. Alexa+ has already demonstrated purchasable recommendations that pull from Prime order history; tie that to Expedia or OpenTable APIs and you have a voice-first, close-the-loop travel agent. Google’s ace is scale: 8.5 billion searches a day feed the reinforcement loop that keeps Gemini 3 improving faster than Alexa’s quarterly skill updates. The question is whether regulators will allow the company to leverage that scale. The DOJ’s ongoing ad-tech trial could, in theory, force Google to divest parts of its ad stack, but Personal Intelligence sits in the consumer product layer—far from the auction mechanisms the Feds are targeting.

Startups feel the squeeze most immediately. Niche tools like Trip-notes or Polyphasic rely on users granting Gmail access through OAuth; Google’s native integration removes the friction those startups were founded to solve. Expect a wave of pivots toward vertical depth (think “AI for scuba itineraries” or “generative search for kosher safaris”), where Google’s generalized model still stumbles.

What Could Go Wrong

Conversation Memory is session-only by default, but subscribers who switch on Personal Intelligence grant the model access to six months of email metadata. Google insists the index lives on-device, encrypted with a hardware-backed key held in the Android Keystore that even Google can’t read. Still, a single rogue SDK update or a well-crafted prompt injection could, in theory, exfiltrate itinerary details to a third-party app that abuses the READ_GMAIL permission. Expect white-hat researchers to hammer the attack surface ahead of Black Hat.

Then there’s the hallucination risk. When the model synthesizes Gmail with live web data, it can create “facts” that never existed, like a boarding-gate change that never happened or a restaurant reservation you never made. Google’s fail-safe is a confidence ribbon (“Based on your email…”) and a one-tap “Remove from answers” button, but users conditioned to trust top-of-page results may not notice the nuance. Airlines and online travel agencies (OTAs) are already lobbying for a “verified” badge akin to Google’s fact-check label for news.
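The “Based on your email…” ribbon and the “Remove from answers” button amount to per-claim provenance plus a user-controlled exclusion list. The Python sketch below shows that shape under stated assumptions: `Claim`, `AnswerRenderer`, and the source identifiers are hypothetical, and only the email ribbon text comes from the article. A ribbon labels where a claim came from; it does nothing to check whether the model summarized that source correctly, which is exactly the gap the hallucination risk describes.

```python
from dataclasses import dataclass, field

# Ribbon labels per private source; only the email wording is from the article.
RIBBONS = {
    "gmail": "Based on your email…",
    "calendar": "Based on your calendar…",
    "photos": "Based on your photos…",
}


@dataclass
class Claim:
    text: str
    source: str     # "web" or a key of RIBBONS
    source_id: str  # hypothetical identifier, e.g. a message id


@dataclass
class AnswerRenderer:
    # Source ids the user has removed from future answers.
    removed: set[str] = field(default_factory=set)

    def remove_from_answers(self, source_id: str) -> None:
        self.removed.add(source_id)

    def render(self, claims: list[Claim]) -> list[str]:
        lines = []
        for c in claims:
            if c.source_id in self.removed:
                continue  # the user excluded this source
            ribbon = RIBBONS.get(c.source)
            # Private claims get a provenance ribbon; web claims do not.
            lines.append(f"[{ribbon}] {c.text}" if ribbon else c.text)
        return lines
```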

The Bottom Line

Google is no longer content to organize the world’s information; it now wants to organize your information, in real time, while you’re still figuring out what to ask. By fusing Gmail context with an always-on conversational layer, the company turns search from a cold-start query into a warm continuation of decisions already half-made. For consumers, that means fewer tabs, less retyping, and a travel agent that actually remembers you hate red-eye flights. For Google, it’s a high-margin path to deepen subscription revenue without cannibalizing the ad cash cow. For competitors, it’s a new moat built on personal data they can’t legally or technically replicate overnight. The rest of us should get ready for a web that remembers not just what the world knows, but what we told it yesterday—and adjust our privacy expectations accordingly.